Function Calling with OpenAI GPT and Assistants: Extending Your AI's Capabilities

Introduction to Function Calling

Function calling lets OpenAI's GPT models and Assistants interact with external services and perform actions beyond text generation. It turns an AI assistant from a passive knowledge repository into an active tool that can retrieve real-time information, run calculations, and control external systems.

In this guide, we'll explore how function calling works with OpenAI models and Assistants, how to implement it in your applications, and how to create AI apps with function calling capabilities using pmfm.ai.

Understanding Function Calling: How It Works

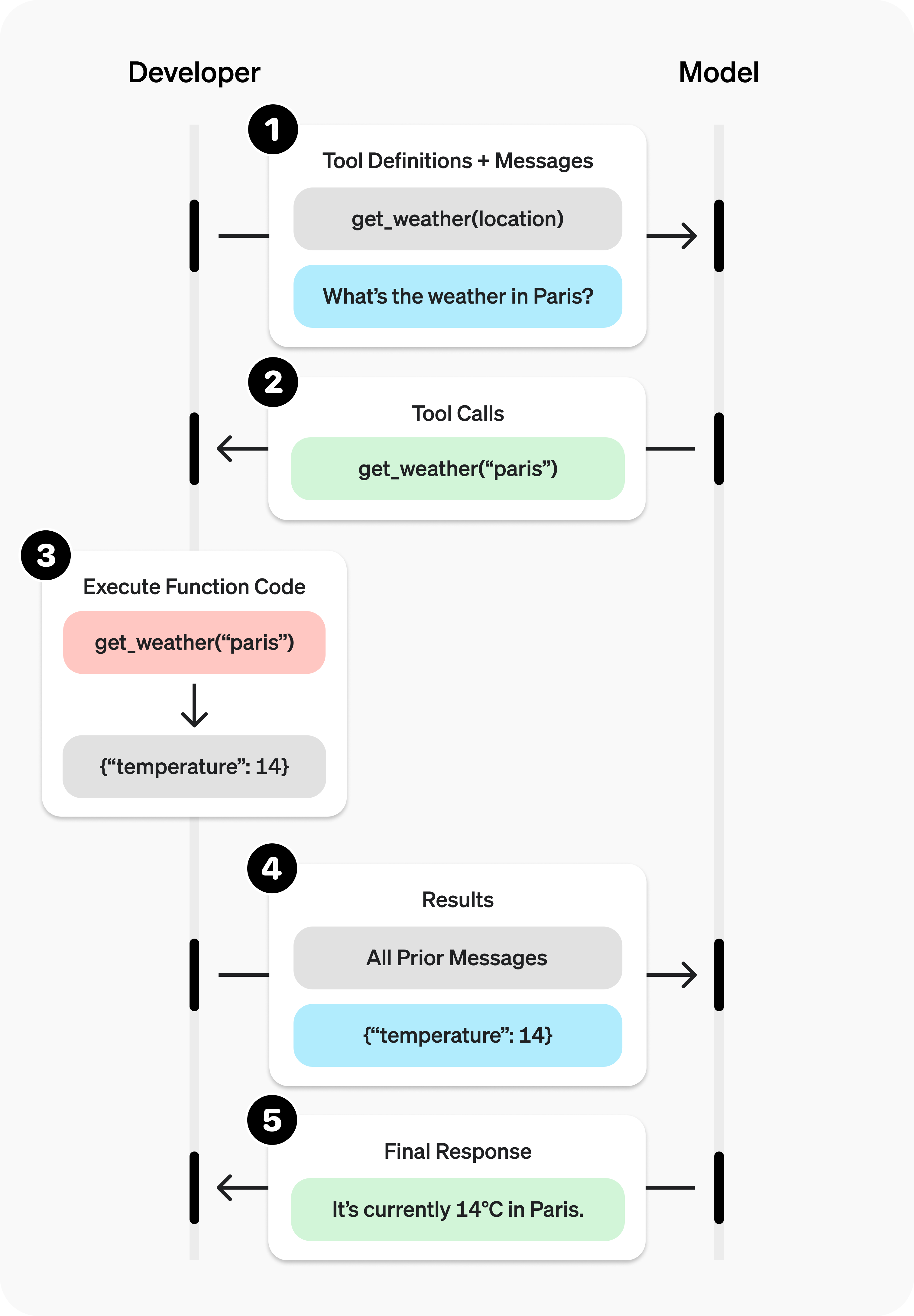

At its core, function calling is a mechanism that allows an AI assistant to request the execution of specific functions when needed to respond to a user query. Here's how the process works:

- Function Definition: You define functions with specific parameters and descriptions that your assistant can call.

- User Input Processing: When a user interacts with the assistant, the AI analyzes the request to determine if it requires external data or actions.

- Function Selection: If needed, the assistant decides which function to call and what parameters to pass.

- Function Execution: Your application executes the requested function with the provided parameters.

- Result Integration: The function result is sent back to the assistant, which incorporates it into its response.

Let's see a concrete example: Imagine a user asks, "What's the weather like in New York today?" Your assistant doesn't inherently know the current weather, but it recognizes this as a weather query. The process unfolds as follows:

Example Flow:

- User: "What's the weather like in New York today?"

- Assistant: (recognizes need for current weather data)

- Assistant calls: get_weather({"location": "New York"})

- Your server: Fetches weather data from a weather API

- Server returns: {"temperature": 72, "condition": "Sunny", "humidity": 45}

- Assistant: "The weather in New York today is sunny with a temperature of 72°F and 45% humidity."
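
The "Your server" step in this flow can be sketched as a small dispatcher. This is a minimal illustration, not a definitive implementation: `get_weather` is a stub returning the canned values from the example, and `FUNCTIONS` and `run_function_call` are hypothetical names. It assumes the Chat Completions convention in which the model supplies the arguments as a JSON string and the result is sent back as a message with the `tool` role.

```python
import json


def get_weather(location: str, unit: str = "fahrenheit") -> dict:
    """Stub standing in for a real weather API lookup (returns canned data)."""
    return {"temperature": 72, "condition": "Sunny", "humidity": 45}


# Registry mapping the function names the model may request to local callables.
FUNCTIONS = {"get_weather": get_weather}


def run_function_call(call_id: str, name: str, arguments: str) -> dict:
    """Execute one requested function and build the message to send back."""
    if name not in FUNCTIONS:
        result = {"error": f"unknown function: {name}"}
    else:
        try:
            # The arguments arrive as a JSON string, not as a parsed object.
            result = FUNCTIONS[name](**json.loads(arguments))
        except (json.JSONDecodeError, TypeError) as exc:
            # Malformed JSON or arguments that do not match the signature.
            result = {"error": str(exc)}
    return {"role": "tool", "tool_call_id": call_id, "content": json.dumps(result)}


message = run_function_call("call_1", "get_weather", '{"location": "New York"}')
print(message["content"])
```

Returning errors as ordinary results, rather than raising, lets the assistant explain the failure to the user instead of leaving the conversation without a reply.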

Benefits of Function Calling

Function calling transforms AI assistants from static knowledge bases into dynamic, interactive systems with several key advantages:

- Real-time Information: Access up-to-date data like weather, stock prices, or news.

- Personalized Experiences: Connect to user databases for personalized responses.

- System Integration: Control external systems like home automation or business tools.

- Complex Calculations: Perform specialized calculations beyond the AI's built-in capabilities.

- Data Transformation: Convert data between formats or process images and other media.

Technical Implementation: How to Define Functions

A function definition is a JSON object with a name, a description, and a parameters field written in JSON Schema that declares each parameter and its type. Here's an example of a simple weather function definition:

    {
      "name": "get_weather",
      "description": "Get the current weather for a location",
      "parameters": {
        "type": "object",
        "properties": {
          "location": {
            "type": "string",
            "description": "The city and state, e.g. San Francisco, CA"
          },
          "unit": {
            "type": "string",
            "enum": ["celsius", "fahrenheit"],
            "description": "The unit of temperature to use",
            "default": "fahrenheit"
          }
        },
        "required": ["location"]
      }
    }

This definition tells the assistant:

- The function is called get_weather

- It retrieves current weather for a location

- It requires a location parameter (a string like "San Francisco, CA")

- It accepts an optional unit parameter (either "celsius" or "fahrenheit")
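
In JSON Schema, `default` is only an annotation, so the assistant may omit `unit` and nothing fills it in automatically. The sketch below shows one way the receiving code might check arguments against the definition and apply the default itself. `prepare_arguments` is a hypothetical helper that handles only flat schemas with string parameters; a full JSON Schema validator would be the more robust choice in production.

```python
# The "parameters" part of the get_weather definition (descriptions omitted).
GET_WEATHER_PARAMETERS = {
    "type": "object",
    "properties": {
        "location": {"type": "string"},
        "unit": {
            "type": "string",
            "enum": ["celsius", "fahrenheit"],
            "default": "fahrenheit",
        },
    },
    "required": ["location"],
}


def prepare_arguments(schema: dict, args: dict) -> dict:
    """Check args against a flat, string-only schema and fill in defaults."""
    for name in schema.get("required", []):
        if name not in args:
            raise ValueError(f"missing required parameter: {name}")
    prepared = {}
    # Only declared properties are copied, so unknown keys are dropped.
    for name, spec in schema.get("properties", {}).items():
        if name not in args:
            if "default" in spec:
                prepared[name] = spec["default"]
            continue
        value = args[name]
        if spec.get("type") == "string" and not isinstance(value, str):
            raise ValueError(f"{name} must be a string")
        if "enum" in spec and value not in spec["enum"]:
            raise ValueError(f"{name} must be one of {spec['enum']}")
        prepared[name] = value
    return prepared


print(prepare_arguments(GET_WEATHER_PARAMETERS, {"location": "San Francisco, CA"}))
```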

Implementing Function Calling with Webhooks

The most flexible way to implement function calling is through webhooks: HTTP endpoints that are invoked whenever the assistant requests a function. This approach allows for:

- Separation of concerns between AI and business logic

- Independent scaling of AI and function execution

- Flexibility to implement functions in any technology stack

- Security through proper API authentication

For our weather function example, implementing a webhook would involve these key steps:

Webhook Implementation Process:

- Create an API endpoint that accepts POST requests (e.g., /api/functions/get_weather)

- Parse the incoming request body to extract function parameters (location and unit)

- Call an external weather service API with these parameters

- Process the weather data received from the external service

- Return a properly formatted JSON response with the weather information

- Implement error handling to manage API failures gracefully
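
The steps above can be sketched with nothing but the Python standard library. Treat this as an illustration under stated assumptions: `fetch_weather` is a hypothetical stand-in for the external weather service, the request body is assumed to be a flat JSON object holding the function parameters, and the status codes are one reasonable choice rather than a required contract.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Tuple


def fetch_weather(location: str, unit: str) -> dict:
    """Hypothetical stand-in for a call to an external weather service."""
    temperature = 72 if unit == "fahrenheit" else 22
    return {"temperature": temperature, "unit": unit, "condition": "Sunny", "humidity": 45}


def handle_get_weather(body: bytes) -> Tuple[int, dict]:
    """Turn a raw request body into an (HTTP status, JSON payload) pair."""
    try:
        params = json.loads(body)
    except ValueError:  # covers invalid JSON and undecodable bytes
        return 400, {"error": "request body must be valid JSON"}
    if not isinstance(params, dict):
        return 400, {"error": "request body must be a JSON object"}
    location = params.get("location")
    if not isinstance(location, str) or not location.strip():
        return 400, {"error": "'location' is required"}
    unit = params.get("unit", "fahrenheit")
    if unit not in ("celsius", "fahrenheit"):
        return 400, {"error": "'unit' must be 'celsius' or 'fahrenheit'"}
    try:
        return 200, fetch_weather(location, unit)
    except Exception as exc:  # upstream failure: report it, don't crash
        return 502, {"error": f"weather service failed: {exc}"}


class FunctionWebhook(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/api/functions/get_weather":
            status, payload = 404, {"error": "unknown function"}
        else:
            length = int(self.headers.get("Content-Length", 0))
            status, payload = handle_get_weather(self.rfile.read(length))
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port: int = 8000) -> None:
    """Start the webhook on localhost; blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), FunctionWebhook).serve_forever()
```

Calling `serve()` starts the endpoint locally. Keeping the request logic in `handle_get_weather`, separate from the HTTP plumbing, makes it straightforward to unit test and to port to Express, Flask, FastAPI, or Spring Boot.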

This webhook can be implemented in any programming language or framework that supports creating HTTP endpoints. Popular choices include Node.js with Express, Python with Flask or FastAPI, or Java with Spring Boot.

When the OpenAI Assistant determines it needs weather information, it requests a get_weather call with the location and unit parameters. The model does not make HTTP requests itself; your application, or a platform such as pmfm.ai, forwards that request to this webhook. The webhook processes the request and returns weather data that the Assistant can then incorporate into its response to the user.

Building AI Apps with Function Calling on pmfm.ai

While implementing function calling from scratch can be complex, platforms like pmfm.ai make it easy to create AI apps with function calling capabilities using both GPT and Assistant models. Here's how you can build an AI app with function calling on pmfm.ai:

Step 1: Create a New AI App

Start by signing up on pmfm.ai and creating a new AI app using either GPT or OpenAI Assistant as the model.

Step 2: Configure Your OpenAI Settings

Enter your OpenAI API key. If using the Assistant model, also provide your Assistant ID. If you don't have an assistant yet, you can create one through the OpenAI dashboard. For GPT models, just select your preferred model.

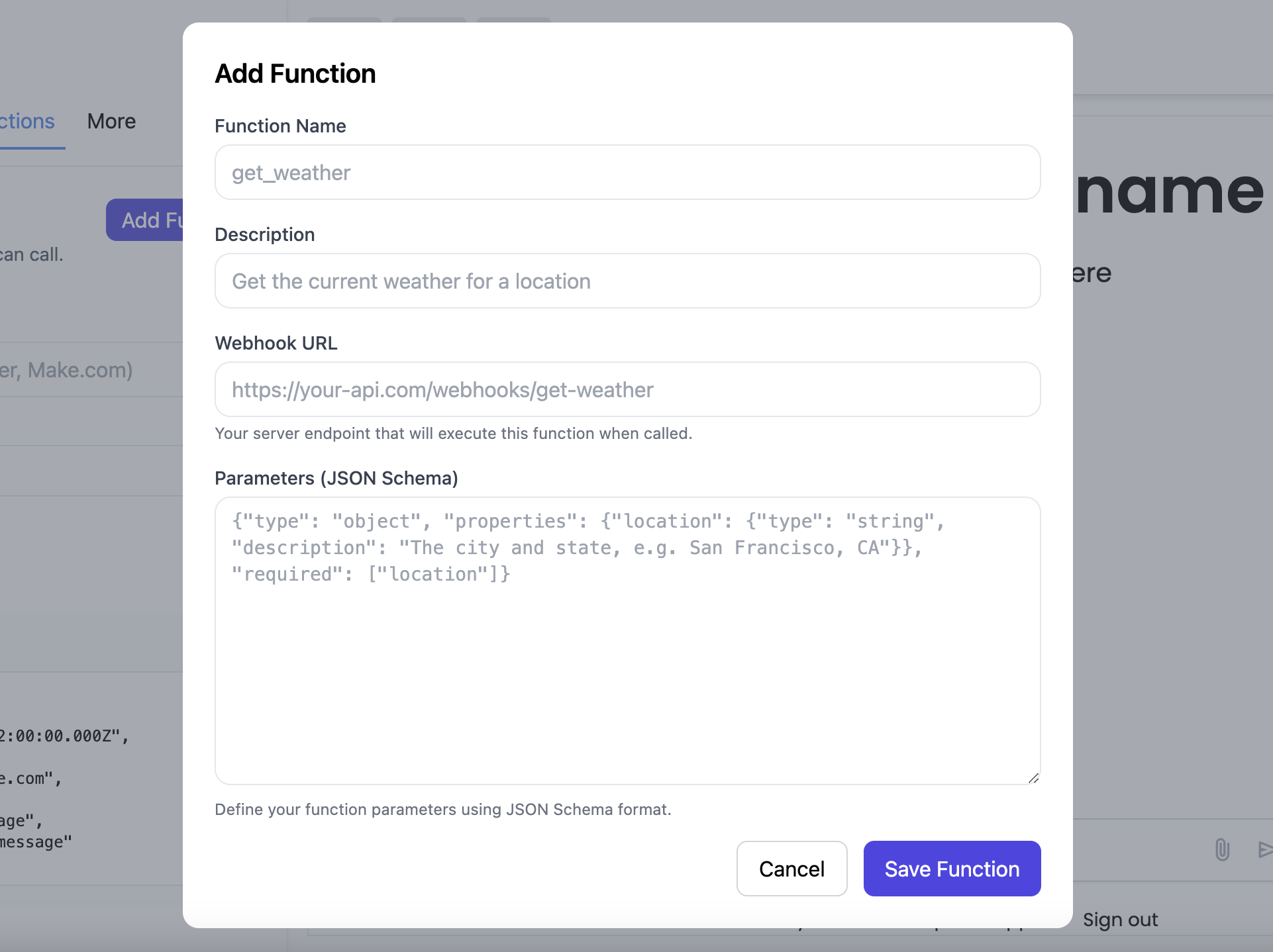

Step 3: Add Functions with Webhook URLs

In the Function Calling section, click "Add Function" to define your functions. This works with both GPT and Assistant models:

- Enter the function name (e.g., "get_weather")

- Provide a clear description

- Specify the webhook URL where the function will be executed

- Define the parameters using JSON Schema

Step 4: Implement Your Webhook Endpoints

Create webhook endpoints on your server to handle function calls. Your webhook should:

- Accept POST requests with JSON parameters

- Execute the requested functionality

- Return results in JSON format

- Include proper error handling

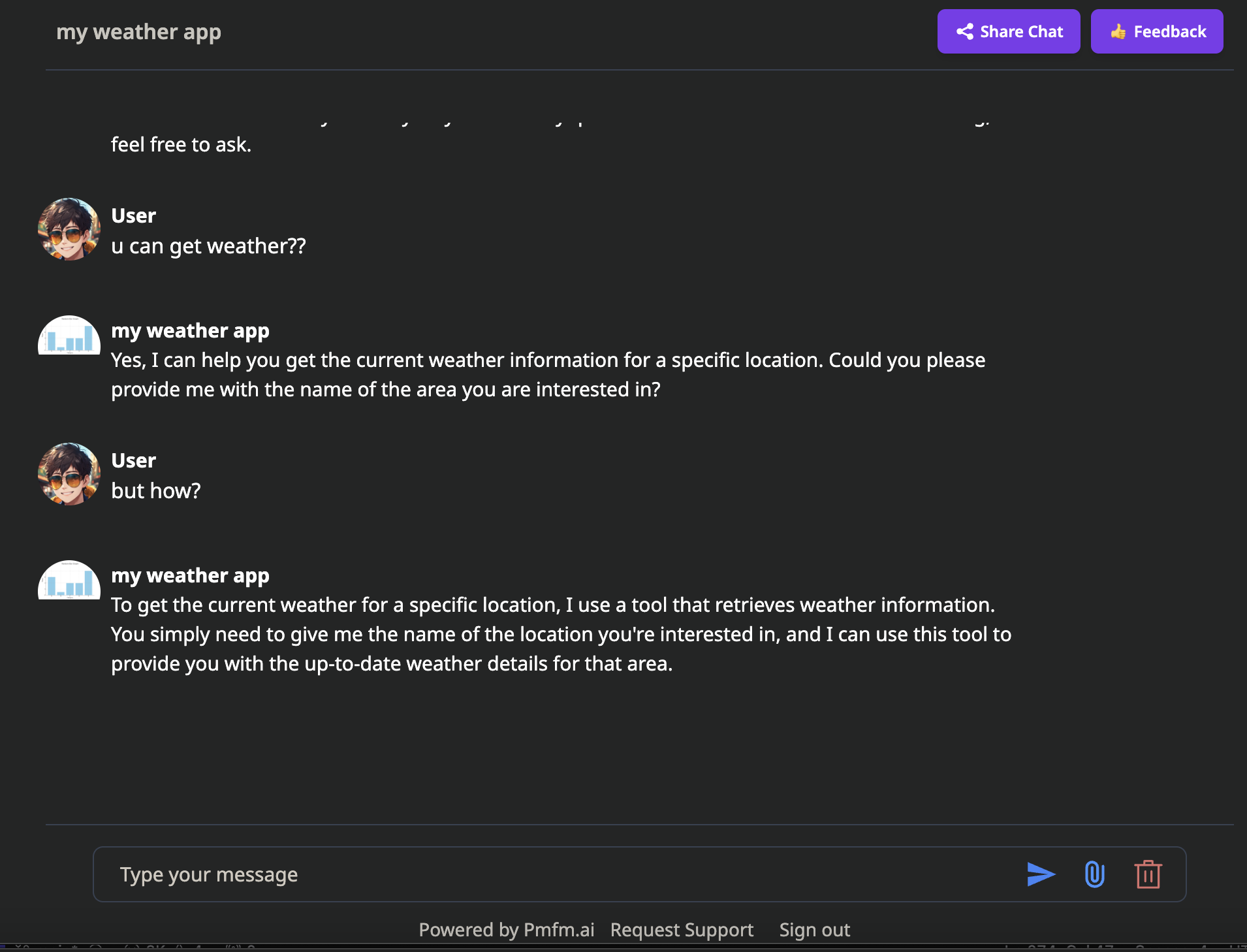

Step 5: Publish and Test Your AI App

After configuring your functions and implementing webhooks, publish your AI app on pmfm.ai. You can then test it by asking questions that would trigger function calls.

Function Calling Best Practices

Whether you're using GPT models or Assistants, follow these best practices to get the most out of function calling:

- Clear Function Descriptions: Provide detailed descriptions so the assistant knows exactly when and how to use each function.

- Parameter Validation: Enforce parameter types and constraints through JSON Schema.

- Error Handling: Implement robust error handling in your webhooks and return meaningful error messages.

- Function Timeout Management: Set appropriate timeouts for function execution to prevent hanging interactions.

- Security: Secure your webhooks with proper authentication and rate limiting.

- Monitoring: Log function calls and responses for debugging and analytics.
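
The error handling, timeout, and monitoring practices can be combined in one small wrapper. The following is a rough sketch rather than a prescribed pattern: `call_with_timeout` and `slow_lookup` are hypothetical names, and the `{"ok": ..., "error": ...}` result shape is an assumption chosen for illustration.

```python
import json
import logging
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("function_calls")

# Shared worker pool; a timed-out call keeps occupying a worker until it ends.
_executor = ThreadPoolExecutor(max_workers=4)


def call_with_timeout(func, args: dict, timeout_s: float) -> str:
    """Run func(**args) with a deadline and always return a JSON string."""
    started = time.monotonic()
    future = _executor.submit(func, **args)
    try:
        result = {"ok": True, "result": future.result(timeout=timeout_s)}
    except FutureTimeout:
        # The worker thread is not killed; we only stop waiting for it.
        result = {"ok": False, "error": f"timed out after {timeout_s}s"}
    except Exception as exc:
        result = {"ok": False, "error": str(exc)}
    elapsed = time.monotonic() - started
    log.info("%s args=%s ok=%s elapsed=%.3fs", func.__name__, args, result["ok"], elapsed)
    return json.dumps(result)


def slow_lookup(seconds: float) -> str:
    """Toy function that simulates a slow external service."""
    time.sleep(seconds)
    return "done"


print(call_with_timeout(slow_lookup, {"seconds": 0.01}, timeout_s=1.0))
print(call_with_timeout(slow_lookup, {"seconds": 0.5}, timeout_s=0.05))
```

Because Python threads cannot be cancelled from outside, this bounds how long the conversation waits, not how long the work runs. Functions that call external services should also set their own network timeouts.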

Real-World Function Calling Examples

Function calling enables a wide range of applications. Here are some practical examples:

E-commerce Assistant

- search_products(query, filters)

- get_product_details(product_id)

- add_to_cart(product_id, quantity)

- check_order_status(order_id)

Travel Booking Assistant

- search_flights(origin, destination, date)

- search_hotels(location, check_in, check_out)

- get_destination_info(city, country)

- create_booking(flight_id, passenger_details)

Finance Assistant

- get_stock_price(symbol)

- calculate_mortgage(principal, rate, term)

- convert_currency(amount, from_currency, to_currency)

- get_account_balance(account_id)
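
As an illustration of what might sit behind one of these functions, here is a possible calculate_mortgage using the standard fixed-rate amortization formula. The units are assumptions, since the signature above does not specify them: `rate` is taken as the annual interest rate in percent and `term` as the loan length in years.

```python
def calculate_mortgage(principal: float, rate: float, term: int) -> float:
    """Monthly payment for a fixed-rate loan, rounded to cents.

    Assumed units: rate is the annual rate in percent, term is in years.
    """
    if principal <= 0 or term <= 0 or rate < 0:
        raise ValueError("principal and term must be positive, rate non-negative")
    months = term * 12
    monthly_rate = rate / 100 / 12
    if monthly_rate == 0:
        # Interest-free loan: spread the principal evenly.
        return round(principal / months, 2)
    growth = (1 + monthly_rate) ** months
    # Standard amortization formula: P * r * (1 + r)^n / ((1 + r)^n - 1)
    return round(principal * monthly_rate * growth / (growth - 1), 2)


print(calculate_mortgage(300_000, 6.0, 30))
```

Deterministic arithmetic like this is a good fit for function calling because the model supplies the inputs while the code guarantees the result.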

Productivity Assistant

- create_calendar_event(title, date, time, attendees)

- search_emails(query, date_range)

- send_message(recipient, content)

- create_task(title, due_date, priority)

Conclusion: The Future of AI Is Interactive

Function calling represents a significant evolution in AI capabilities, transforming both GPT models and Assistants from passive knowledge repositories into interactive systems that can take real action in the world. By combining the reasoning capabilities of large language models with the ability to execute specific functions, we can create AI applications that solve real problems and provide tangible value.

With platforms like pmfm.ai, implementing these advanced capabilities is now accessible to developers and businesses of all sizes. Whether you're building a customer service bot, a personal productivity assistant, or a specialized industry tool, function calling can help you create more capable, responsive, and useful AI applications.

Ready to get started? Sign up for pmfm.ai today and build your first AI app with function calling capabilities in minutes!
